School of Computing and Mathematical Sciences

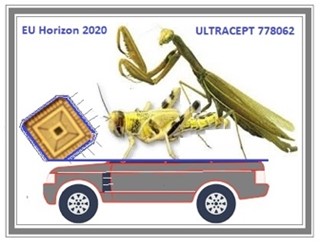

ULTRACEPT

Ultra-layered perception with brain-inspired information processing for vehicle collision avoidance.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 778062.

The ULTRACEPT project started on 1 December 2018. It was scheduled to run for 4 years, until 30 November 2022. However, due to COVID-related delays, the project was extended by 22 months and is now scheduled to end on 30 September 2024.

About ULTRACEPT

The primary objective of this project is to develop a trustworthy vehicle collision detection system inspired by animals’ visual brains through trans-institutional collaboration. The system will process multi-modal sensor inputs, such as vision and thermal maps, with innovative visual brain-inspired algorithms. The project will embrace multidisciplinary and cross-continental cooperation between academia and industry in the field of bio-inspired visual neural systems through neurophysiological experiments, software simulation, hardware realisation and system integration. The brain-inspired collision detection system offers the low cost and the spatio-temporal, parallel computing capacity of visual neural systems, and can be realised in chips designed specifically for collision detection in normal, complex and extreme environments.

This project brings together 19 world-class research teams and European SMEs, each with specialised expertise:

- invertebrate visual neuroscientists (UNEW and UBA on the locust and crab)

- invertebrate vision modellers (University of Leicester, ULEIC)

- cognitive neural system modellers (XJTU, GZHU and GZU)

- mixed-signal VLSI chip designers (TU)

- experts in hardware/software systems and robotics (UHAM, HUST, TUAT, LNU)

- robotics platform provider and market explorer (VISO, DINO)

- image signal processing (UPM, NWPU)

- brain-inspired pattern recognition experts (CASIA, WWU)

The specifically designed research staff secondments, joint workshops and conferences will facilitate knowledge transfer between partners and generate significant impact in vehicle collision avoidance research, with applications not only in road safety but also in other areas such as mobile robots, health care and the video games industry.

The project will cultivate concrete research connections between institutions in Europe, the Far East and South America, and will significantly enhance the leadership of the European Institutions in this emerging research field.

The European SMEs will have opportunities to access world-leading expertise in research and development and to position themselves as market leaders in automatic collision detection sensors and systems, which in turn will help them expand their market share in intelligent sensor systems.

Partners

The 19 ULTRACEPT project partners are listed below.

- University of Leicester (ULEIC), UK. Following the relocation of Professor Shigang Yue from UoL to ULEIC, ULEIC joined the consortium as a project partner and took over the role of project Coordinator from 15 June 2023.

- University of Lincoln (UoL), UK. Project Coordinator from 1 December 2018 to 14 June 2023.

- University of Hamburg (UHAM), Germany

- University of Newcastle (UNEW), UK

- Westfaelische Wilhelms-Universitaet Muenster (WWU), Germany

- Visomorphic Technology Ltd (VISO), UK

- Dino Robotics GmbH (DINO), Germany

- Tsinghua University (TU), China

- Xi’an Jiaotong University (XJTU), China

- Huazhong University of Science and Technology (HUST), China

- Northwestern Polytechnical University (NWPU), China

- University of Buenos Aires (UBA), Argentina

- National University Corporation Tokyo University of Agriculture and Technology (TUAT), Japan

- Universiti Putra Malaysia (UPM), Malaysia

- Lingnan Normal University (LNU), China

- Institute of System Science and IT (ISSIT), Guizhou University (GZU), China

- Institute of Automation, Chinese Academy of Sciences (CASIA), China

- Guangzhou University (GZHU), China

- Agile Robots, Germany

Workshops/training seminars

- Workshop 1 (kick off), GZHU, 23 Jan 2019

- Workshop 2 (Teams), 28 July 2020

- Training seminar (Teams), 29 July 2020

- Workshop 3 (hybrid, Teams, GZHU), 25-26 March 2021

- Workshop 4, UHAM, 25-26 Oct 2021

- Workshop 5, ULEIC, 23 August 2024

Meetings

- Kick off meeting, Jan 2019

- Mid-term review meeting, Feb 2020

- ULTRACEPT annual board meeting, March 202

Dissemination (recent)

Publications

- Zhang J, Jiang X. (2025). Evolutionary training set pruning for boosting interpolation kernel machines. In Pattern Recognition Applications and Methods, Springer. [accepted / in press (not yet published)]

- Zhang J, Jiang X. (2024). Regularization of interpolation kernel machines. In Proc. of ICPR. [accepted / in press (not yet published)]

- Zhang J, Jiang X. (2024). Classification performance boosting for interpolation kernel machines by training set pruning using genetic algorithm. In M. Castrillon-Santana, M. De Marsico, A. Fred (Eds.): Proc. of ICPRAM, pp. 428–435. Setúbal: SciTePress.

- Zhang J, Liu CL, Jiang X. (2024). Polynomial kernel learning for interpolation kernel machines with application to graph classification. Pattern Recognition Letters, 186, 7–13.

- Manongga WC, Chen RC, Jiang X. (2024). Enhancing road marking sign detection in low-light conditions with YOLOv7 and contrast enhancement techniques. International Journal of Applied Science and Engineering, 21(3), 2023515.

- Dewi C, Chen RC, Yu H, Jiang X. (2023). XAI for image captioning using SHAP. Journal of Information Science and Engineering, 39, 711–724.

- Wang Hongxin, Zhong Zhiyan, Lei Fang, Peng Jigen, Yue Shigang. (2023). Bio-inspired small target motion detection with spatio-temporal feedback in natural scenes. IEEE Transactions on Image Processing. 33, 451-465. DOI: 10.1109/TIP.2023.3345153

- Sun Xuelong, Hu Cheng, Liu Tian, Yue Shigang, Peng Jigen and Fu Qinbing. (2023). Translating Virtual Prey-Predator Interaction to Real-World Robotic Environments: Enabling Multimodal Sensing and Evolutionary Dynamics. Biomimetics. 8(8), 580. DOI: 10.3390/biomimetics8080580

- Sun Xuelong, Fu Qinbing, Peng Jigen, Yue Shigang. (2023). An insect-inspired model facilitating autonomous navigation by incorporating goal approaching and collision avoidance. Neural Networks. 165, 106-118. DOI: 10.1016/j.neunet.2023.05.033

- Luan Hao, Hua Mu, Zhang Yicheng, Yue Shigang, Fu Qinbing. (2023). A BLG1 neural model implements the unique looming selectivity to diving target. Optoelectronics Letters, 19(2), 112-116. DOI: 10.1007/s11801-023-2095-0

Posters

- The synaptic complexity of a lobula giant neuron in crabs. Yair Barnatan, Claire Rind and Julieta Sztarker. XV International Congress of Neuroethology, Berlin, Germany, 28 July to 2 August 2024.

- Cuing effects on praying mantis strikes are long-lasting and disparity dependent. Theo Robert, Emiliano Kalesnik Vissio, Siyuan Jing, Julieta Sztarker, Vivek Nityananda. XV International Congress of Neuroethology, Berlin, Germany, 28 July to 2 August 2024.

Talks

- “The neuropil processing optic flow in mud crabs is the lobula plate: optomotor responses are severely impaired in lesioned animals”, V International Conference on Invertebrate Vision, Bäckaskog Castle, Sweden, 27 July to 3 August 2023.

- “From anatomy to function: finding optic flow processing centers in crabs with the help of flies”, departmental seminar at the CBE (Centre for Behaviour and Evolution, Newcastle University), 15 August 2023.

- “Diverse synaptic connectivity underlies the multiple physiological roles of a lobula giant neuron in crabs”, Minisymposium: Frontiers in Crustacean Neuroscience, 5 August 2024, Division of Neurobiology, Institute of Biology, Freie Universität Berlin.

Contact

- Professor Shigang Yue (sy237@leicester.ac.uk), Computing and Mathematical Sciences, University of Leicester